51. MLOps Basics – How AI models get deployed & monitored

Let’s break down the basics of MLOps in a way that’s relevant to SMBs. We’ll explore what exactly MLOps entails, how models are deployed and monitored, key tools you might consider (including accessible cloud platforms), and real-world examples of smaller companies benefiting from these practices. By the end, you should see MLOps not as a technical buzzword, but as a strategic enabler for AI adoption in your business – one that can be approached incrementally and cost-effectively.

Q1: FOUNDATIONS OF AI IN SME MANAGEMENT - CHAPTER 2 (DAYS 32–59): DATA & TECH READINESS

Gary Stoyanov PhD

2/20/2025 · 30 min read

1. What Exactly is MLOps? (In Business Terms)

MLOps is short for Machine Learning Operations. It’s often described as the intersection of machine learning, software DevOps, and data engineering. In simpler terms, MLOps is all about making your AI models “product-ready.” It’s the set of processes that ensure a model isn’t just accurate in a lab test, but is also deployable, scalable, auditable, and maintainable in a live environment. Think of it as the equivalent of a production line in manufacturing – it takes prototypes (ML models) and turns them into products (services or tools running within your business).

Key aspects of MLOps include:

Collaboration: It fosters teamwork between data scientists (who build the models), engineers/IT (who deploy and maintain them), and business stakeholders (who define the requirements and use the outputs). Everyone works together to align the model’s development with the operational needs and business goals.

Automation: Wherever possible, repetitive or complex steps are automated. For instance, instead of manually deploying a model every time it’s updated, an automated pipeline can do this after running tests. Automation means faster iterations and less room for human error.

Continuous Lifecycle: MLOps treats ML deployment as a continuous process, not a one-off project. Models might need updates when new data comes in or when conditions change. MLOps establishes a cycle of continuous integration, delivery, and training (CI/CD/CT in ML terms), so improvements can be rolled out regularly.

Governance and Monitoring: In industries with regulations or high risks (finance, healthcare, etc.), knowing what your model is doing is critical. MLOps includes tracking model versions, documenting data lineage (what data trained the model), and monitoring performance. This provides accountability and the ability to audit decisions made by AI, which is important for trust and compliance.

MLOps ensures that when you, as a business leader, green-light an AI project, it doesn’t stop at a proof-of-concept. Instead, it goes all the way to a deployed solution that your staff or customers can use reliably – and it stays useful through monitoring and maintenance.

2. Core Components of Deploying and Monitoring AI Models

To appreciate how MLOps works, let’s break down the core components involved in deploying and monitoring AI models. These components form an end-to-end pipeline – from the data that goes into developing a model, all the way to keeping an eye on the model’s outputs in production.

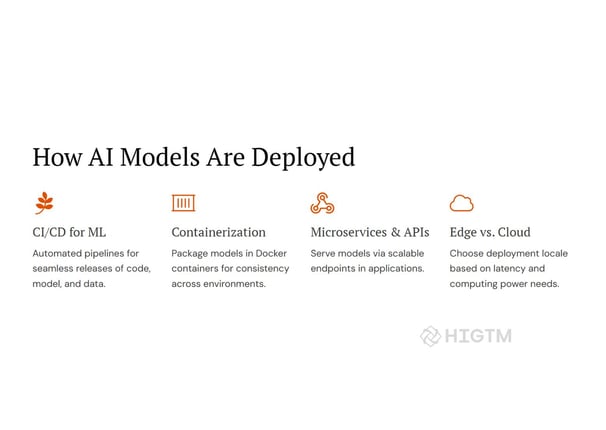

2.1 Model Deployment Pipeline

Deploying a model means taking it from the development environment (where it’s trained and tested) into the production environment (where it will actually be used). A robust deployment pipeline typically involves:

Model Packaging: The first step is packaging the model with all its dependencies (libraries, runtime, etc.) so it can run anywhere. Often, this is done using containers (like Docker). By containerizing an ML model, you ensure that the environment is the same on a developer’s laptop, on a test server, or in the cloud. This eliminates the “works on my machine” problem.

Testing & Validation: Before a model goes live, MLOps mandates thorough testing. This can include testing the model’s predictive performance on unseen data, testing for integration (does it work with the rest of the application?), and even A/B testing it against an older model to ensure it truly performs better. For example, if you had a previous churn prediction model, you might deploy the new model to a small percentage of users to compare outcomes before a full rollout.

Continuous Integration/Continuous Deployment (CI/CD): Borrowed from software engineering, CI/CD in MLOps automates the release process. Continuous Integration might involve automatically retraining a model when new data is available or when code changes, and running a battery of tests. If it passes, Continuous Deployment will push that new model version to production. This means updates can be delivered frequently and reliably. For instance, a small fintech startup could set up CI/CD so that every week their fraud detection model retrains on the latest data and, after validation, the updated model is deployed without manual intervention.

Infrastructure Management: Deployment also involves managing the infrastructure where the model runs. This could be a cloud service, on-premises servers, or edge devices. Infrastructure as Code (IaC) tools (like Terraform or CloudFormation) may be used so that your infrastructure (servers, networking, etc.) is defined in scripts – enabling reproducibility and easy adjustments. SMBs using cloud platforms can benefit here by scaling infrastructure up or down based on demand (saving cost during low usage times).
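
To make the "validate, then deploy" step concrete, here is a minimal sketch of a promotion gate in Python. It is an illustration under stated assumptions, not a prescribed implementation: the names `EvalResult` and `should_promote`, the one-point accuracy gain, and the 200 ms latency limit are all hypothetical choices you would replace with your own metrics and thresholds.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class EvalResult:
    """Metrics for one model version, measured on the same held-out dataset."""
    version: str
    accuracy: float     # fraction of correct predictions, 0.0 to 1.0
    latency_ms: float   # typical prediction latency in milliseconds


def should_promote(candidate: EvalResult, production: EvalResult,
                   min_gain: float = 0.01, max_latency_ms: float = 200.0) -> bool:
    """Return True only if the candidate is clearly better and fast enough."""
    better = candidate.accuracy >= production.accuracy + min_gain
    fast_enough = candidate.latency_ms <= max_latency_ms
    return better and fast_enough


# Hypothetical numbers for a weekly retrain of a fraud model.
current = EvalResult("v1.3", accuracy=0.871, latency_ms=45.0)
retrained = EvalResult("v1.4", accuracy=0.889, latency_ms=48.0)

if should_promote(retrained, current):
    print(f"Deploy {retrained.version}")
else:
    print(f"Keep {current.version}")
```

In a CI/CD pipeline, a check like this would typically sit between the retraining job and the deployment job, so a model that fails the gate never reaches production.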

2.2 Model Serving and Inference

Once deployed, a model needs to be served – meaning there’s a way for end-users or systems to get predictions from it:

Real-time Serving: For applications that need instant predictions (e.g., a user clicks a product and you want immediate recommendations), models are served via APIs or microservices. The model sits behind an endpoint; whenever it’s called with input data, it returns a prediction. The MLOps platform ensures this service is always available, perhaps with multiple instances behind a load balancer for reliability.

Batch Serving: Not all use cases are real-time. Some predictions are done in batches (e.g., every night generate a report of tomorrow’s sales forecast for all store locations). MLOps handles batch scheduling, making sure the model runs on the right schedule, and outputs are delivered to wherever they need to go (a database, an email, etc.).

Scalability: A big part of serving is scaling to meet demand. If your e-commerce site suddenly gets a traffic spike during holiday season, you might need more instances of the model service to handle all the requests. Cloud-based MLOps can auto-scale this. Conversely, during slow periods, it can scale down to save resources.
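
As a rough illustration of what sits behind a real-time endpoint, the sketch below shows the request-handling logic only: parse the input, validate it, call the model, and return a versioned response. Everything here is an assumption made for the example, including the field names, the `v1.4` version label, and the toy scoring rule that stands in for a trained model. A real service would wrap this function in a web framework or a managed endpoint.

```python
import json
from typing import Tuple

REQUIRED_FIELDS = ("support_tickets", "months_active")  # hypothetical inputs
MODEL_VERSION = "v1.4"                                   # hypothetical label


def predict_churn_risk(features: dict) -> float:
    """Stand-in for a trained model: returns a score between 0 and 1."""
    # Toy rule used only so the example runs without a real model.
    score = 0.1 + 0.2 * features["support_tickets"] - 0.01 * features["months_active"]
    return max(0.0, min(1.0, score))


def handle_request(body: str) -> Tuple[int, str]:
    """Validate a JSON request and return (HTTP status code, JSON response)."""
    try:
        payload = json.loads(body)
    except json.JSONDecodeError:
        return 400, json.dumps({"error": "body is not valid JSON"})
    if not isinstance(payload, dict):
        return 400, json.dumps({"error": "body must be a JSON object"})
    missing = [f for f in REQUIRED_FIELDS if f not in payload]
    if missing:
        return 400, json.dumps({"error": f"missing fields: {missing}"})
    score = predict_churn_risk(payload)
    return 200, json.dumps({"model_version": MODEL_VERSION,
                            "churn_risk": round(score, 3)})


status, response = handle_request('{"support_tickets": 2, "months_active": 10}')
print(status, response)
```

Because the handler is a plain function, the same code can serve a batch job: loop over the nightly records and call `predict_churn_risk` on each one.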

2.3 Monitoring and Alerting

Now that the model is up and running, monitoring is the component that continuously checks its health and performance:

Performance Metrics: These include technical metrics like response time (latency), throughput (predictions per second), error rates (did any requests fail), and hardware usage (CPU, memory, GPU utilization if applicable). For example, if a model’s response time suddenly doubles, it could indicate an issue that needs attention (maybe a change in input data causing heavier computation).

Accuracy Metrics & Drift Detection: Beyond technical performance, we monitor the model’s prediction quality. This can be tricky because in many cases, you might not know the true answer immediately (e.g., if a model predicts which customers will churn, you only know who actually churns weeks later). Still, there are ways: you might use proxy metrics or periodically evaluate the model on fresh data when ground truth becomes available. Drift detection is a key MLOps practice – detecting if the input data distribution has shifted (data drift) or if the relationship between inputs and outputs has changed (concept drift). If your model was trained on user behavior from summer, and now it’s holiday season, the data patterns might be quite different. Tools like Evidently AI (open source) or built-in features in platforms like Azure ML can compare statistical properties of current data against the training set and flag significant differences.

Alerts: When monitoring systems detect anomalies – say, the model’s accuracy on recent data dropped by 10%, or simply that the service is down – they will trigger alerts. Alerts are typically sent to the ops team via email, messaging apps, or dashboard notifications. For an SMB with a small team, it might be an email or a Slack message at 2 AM telling the on-call person something needs fixing. The alerting thresholds are usually configurable: you decide what constitutes a big enough deviation to warrant a human check.
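
One simple way to quantify data drift on a single numeric feature is the Population Stability Index (PSI), which compares how training data and live data are spread across the same bins. The sketch below is a standard-library illustration, not the method used by any particular platform. The bin edges, the sample ages, and the 0.2 alert threshold (a commonly quoted rule of thumb) are assumptions to tune for your own data, and the functions assume non-empty inputs.

```python
import math
from typing import List, Sequence


def bin_fractions(values: Sequence[float], edges: Sequence[float]) -> List[float]:
    """Fraction of values falling in each bin defined by ascending edges."""
    counts = [0] * (len(edges) + 1)
    for v in values:
        idx = sum(1 for e in edges if v >= e)  # number of edges at or below v
        counts[idx] += 1
    total = len(values)
    # A small floor avoids log(0) when a bin is empty.
    return [max(c / total, 1e-4) for c in counts]


def population_stability_index(train: Sequence[float], live: Sequence[float],
                               edges: Sequence[float]) -> float:
    """PSI = sum over bins of (live - train) * ln(live / train)."""
    p = bin_fractions(train, edges)
    q = bin_fractions(live, edges)
    return sum((qi - pi) * math.log(qi / pi) for pi, qi in zip(p, q))


# Hypothetical customer ages: training data versus last week's traffic.
train_ages = [25, 35, 45, 55, 65, 75, 40, 60, 52, 48]
live_ages = [22, 24, 28, 31, 27, 35, 29, 45, 26, 33]
AGE_EDGES = [30, 50, 70]
DRIFT_THRESHOLD = 0.2  # assumption: rule-of-thumb cutoff, tune per feature

psi = population_stability_index(train_ages, live_ages, AGE_EDGES)
if psi > DRIFT_THRESHOLD:
    print(f"ALERT: age distribution drifted (PSI={psi:.2f})")
```

The alert branch is where an email or chat notification would be sent. Keeping the threshold in one named constant makes it easy to adjust when alerts turn out to be too noisy or too quiet.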

2.4 Logging and Versioning

MLOps involves meticulous logging of what the model is doing and comprehensive versioning for reproducibility:

Logging Predictions: In many cases, you’ll log the inputs a model received and the outputs it produced (within privacy limits) along with a timestamp and model version. This log becomes a goldmine for debugging and analysis. If later someone says “why did the AI make this recommendation?”, you can trace it back.

Version Control for Models: Just as software has version control (e.g., v1.0, v1.1, etc.), models do too. MLOps dictates that every time you retrain and deploy a model, that new artifact is versioned and stored (often in a model registry). This way, if the newest model has issues, you can roll back to a previous version quickly. It also helps for auditing – you can demonstrate which model was in use at a particular time and what data went into training it.

Data and Code Lineage: Versioning isn’t only for the model; it extends to the data and code. It should be clear which code (training script version) and which dataset (or data slice, with date ranges, etc.) produced the model. Many MLOps tools automatically capture this. For example, MLflow can save a run with parameters, code version (via a Git commit hash), and data references. This ensures that if you needed to recreate a model from six months ago, you could – an important capability if regulators or clients challenge an outcome down the line.
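
The logging and lineage ideas above can be sketched as a single structured log record per prediction. This is a minimal illustration using only the Python standard library; the field names and the function `build_log_record` are hypothetical, and a dedicated tool such as MLflow would capture much of this for you.

```python
import hashlib
import json
from datetime import datetime, timezone


def build_log_record(model_version: str, git_commit: str,
                     features: dict, prediction: float) -> str:
    """Return one JSON line describing a single prediction."""
    # Sorting keys makes the hash independent of the order fields arrive in.
    canonical = json.dumps(features, sort_keys=True)
    record = {
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "model_version": model_version,   # which model artifact answered
        "git_commit": git_commit,         # which training code produced it
        "input_hash": hashlib.sha256(canonical.encode("utf-8")).hexdigest(),
        "features": features,
        "prediction": prediction,
    }
    return json.dumps(record, sort_keys=True)


# Hypothetical values; in practice each line is appended to a log file or table.
line = build_log_record("v1.4", "abc1234", {"basket_size": 3, "region": "EU"}, 0.72)
print(line)
```

With records like this, answering "why did the AI make this recommendation?" starts with a lookup by timestamp and model version rather than guesswork.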

In sum, deploying and monitoring models via MLOps is a multi-faceted process. It’s like running a mission control for your AI – overseeing the launch (deployment), the flight (serving predictions), and keeping an eye on all systems for any corrections needed (monitoring and alerts). For SMBs, implementing all these components might sound complex, but as we’ll see next, there are tools and platforms that bundle a lot of this functionality, making it much more accessible than it was just a few years ago.

3. Tools and Platforms Enabling MLOps (Accessible Options for SMBs)

The good news for small and mid-sized businesses is that you don’t need to invent MLOps tools from scratch. A vibrant ecosystem of platforms and software has emerged to make MLOps easier. Here, we’ll focus on tools that are both popular and viable for SMBs – including cloud solutions and key open-source projects.

3.1 Managed Cloud MLOps Platforms

Major cloud providers offer integrated MLOps suites. These are attractive for SMBs because they reduce the need for in-house infrastructure and expertise – you’re essentially renting battle-tested tools at a fraction of the cost of building them.

AWS SageMaker: Amazon’s SageMaker is a fully-managed service covering the whole ML lifecycle. For MLOps specifically, SageMaker provides features like SageMaker Pipelines (for CI/CD workflows of data prep, train, deploy), Model Monitor (which can automatically detect data drift in your deployed endpoints), and SageMaker Clarify (which helps monitor biases in data and predictions). An SMB can use SageMaker to, say, train a model on their sales data, deploy it as a secured API endpoint, and let Model Monitor alert them if incoming data deviates from the training data profile. All of this can be done through Amazon’s interface or automated via scripts – no need to maintain your own servers for it. Pricing is pay-as-you-go, which is budget-friendly for smaller deployments.

Google Cloud Vertex AI: Google’s Vertex AI is a unified platform that combined their AI Platform and AutoML tools into one. It provides an end-to-end solution: you can ingest data, train models (including AutoML for those who want to train models without coding), deploy them, and monitor with Vertex AI. A standout feature is the integration with Kubeflow pipelines (for those who need more flexibility) and a built-in Feature Store (to manage and serve ML input data consistently). SMBs might leverage Vertex AI to deploy, for example, an image recognition model and use Google’s efficient infrastructure to serve predictions globally with low latency. Vertex AI also includes drift detection and can sync with BigQuery for logging model predictions and analyzing them.

Azure Machine Learning (Azure ML): Microsoft’s Azure ML offers robust MLOps capabilities. It has an MLOps framework that integrates with Azure DevOps or GitHub Actions to enable CI/CD for ML. You can register models in the Azure ML model registry, deploy them to Azure endpoints (or even to edge devices via Azure IoT Edge integration), and enable Application Insights for monitoring the model’s performance in real time. Azure ML’s designer is a UI that some SMB teams might find useful to visually create pipelines. Also, if your company uses a lot of Microsoft stack products, Azure ML fits nicely with those (e.g., data can stream from Azure Data Factory, and outputs can go to Power BI dashboards).

Other Cloud Options: There are other notable mentions like IBM Watson Studio, Oracle Cloud AI, etc., but AWS, Google, and Azure cover the vast majority of use cases. Additionally, specialized services like DataRobot or H2O.ai offer platforms that include MLOps features (auto-deployment, monitoring), often using an AutoML angle. These can be useful if you want a solution where much of the pipeline is abstracted and you’re okay with a more black-box approach – sometimes preferred if you don’t have data scientists on staff.

Why Cloud MLOps for SMBs? Because it lowers the barrier to entry. Instead of hiring a team to integrate dozens of tools and maintain servers, you can log into a console, follow guided workflows, and have an ML pipeline running in a short time. Cloud platforms also continuously update their features (like adding new algorithms, better monitoring, security patches) without you having to do anything – a huge plus for a small business that can’t dedicate a lot of time to tool maintenance. The trade-off is cost (beyond a certain scale, cloud can get expensive) and some loss of control/flexibility. But for most SMB-scale projects, the cost is manageable and the speed of implementation + reliability pays off.

3.2 Open-Source MLOps Tools

For those who want more control or to avoid cloud vendor lock-in (and possibly save costs at scale), the open-source world provides many building blocks of MLOps. These tools can often be used in conjunction with cloud (e.g., running an open-source tool on cloud infrastructure) or on your own servers.

MLflow: Originating from Databricks, MLflow has become a popular open-source platform for managing ML experiments and deployments. It has four main components: Tracking (log parameters, metrics, artifacts of model training runs), Projects (packaging code in a reproducible way), Models (a standard for packaging models that can be deployed to different platforms), and Model Registry (to manage model versions and stages like “Staging” or “Production”). An SMB team can use MLflow Tracking to keep records of various models they’ve tried, then promote the best model to the registry and deploy it. MLflow doesn’t do heavy-duty deployment by itself, but it integrates well with tools like Docker or cloud services to deploy the model artifact.

Kubeflow: Kubeflow is an open-source project originally started at Google, which aims to make Kubernetes (a container orchestration system) a viable platform for machine learning. It’s like an out-of-the-box ML toolkit that runs on Kubernetes. Kubeflow includes components for model training (TFJob for TensorFlow, PyTorchJob, etc.), Jupyter notebooks for development, Pipelines for workflow automation (similar in concept to SageMaker Pipelines or Azure ML pipelines), and serving (KFServing, since renamed KServe, for deploying models on Kubernetes with scaling). Using Kubeflow can be cost-effective if you already use Kubernetes (or don’t mind setting up a cluster, which is now straightforward with cloud-managed Kubernetes). It provides a lot of flexibility and is very powerful – but it requires more DevOps know-how than many SMBs have readily available.

Airflow / Prefect / Luigi: These are general workflow orchestration tools (Airflow by Apache is the most popular, Prefect is a modern alternative, Luigi by Spotify is another) not specific to ML but often used in MLOps to schedule and manage complex pipelines. For instance, an SMB could use Airflow to orchestrate a nightly job that does: data extraction → preprocessing → model retraining → testing → if OK, register new model and trigger a deployment. Airflow will let you define this pipeline with dependencies and it will take care of execution and alerting on failure.

Model Serving Tools: There are open-source tools specifically for serving models at scale. TensorFlow Serving is one for serving TensorFlow models efficiently. TorchServe does similar for PyTorch models. Seldon Core is an open-source platform to deploy models (not tied to one ML framework) on Kubernetes, supporting advanced deployment patterns (like A/B tests, canary deployments). BentoML is a toolkit to build and package model APIs quickly. These can be handy if you want to deploy models in your own environment or on cheaper cloud compute instances without using the full higher-level services.

Monitoring and Data Science Tools: For monitoring model performance and data quality, open source offers solutions like Evidently (which can calculate drift and other metrics, producing interactive reports), whylogs (for logging and analyzing statistical properties of data in production), and Great Expectations (for validating data quality before feeding it to models). These aren’t all-in-one platforms, but can be integrated into pipelines to add specific MLOps functionality.
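
The nightly job described in the orchestration item above (extract, preprocess, retrain, test, then register) boils down to running steps in order and stopping at the first failure. The sketch below shows that control flow in plain Python as a stand-in for an orchestrator. It is not Airflow code, and the step functions are hypothetical placeholders that simply report success or failure.

```python
from typing import Callable, List, Tuple

Step = Tuple[str, Callable[[], bool]]


def run_pipeline(steps: List[Step]) -> List[str]:
    """Run steps in order and stop at the first one that fails."""
    log = []
    for name, step in steps:
        ok = step()
        log.append(f"{name}: {'ok' if ok else 'FAILED'}")
        if not ok:
            break  # later steps (such as registering the model) never run
    return log


# Placeholder steps; real ones would query databases, train models, and so on.
nightly = [
    ("extract", lambda: True),
    ("preprocess", lambda: True),
    ("retrain", lambda: True),
    ("test", lambda: False),       # simulate a model that fails validation
    ("register", lambda: True),
]

for entry in run_pipeline(nightly):
    print(entry)
```

A real orchestrator adds what this sketch leaves out: scheduling, retries, parallel branches, and alerting when a step fails.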

Choosing Tools: The choice between managed platforms and open-source (or a mix of both) often comes down to your team’s expertise and specific needs:

If you have a very small team and speed is essential, managed cloud services might be the best – they handle many concerns automatically.

If you have some engineering resources and the need for flexibility (or cost sensitivity for long-running processes), combining a few open-source tools might give you a tailored solution. For example, you might use MLflow for experiment tracking, Airflow for orchestration, and deploy models to a simple Docker container on AWS ECS or Azure Container Instances.

A hybrid approach is also common: use cloud for some parts (like training with managed auto-scaling compute, or using a cloud feature store) and open-source for others (like using your own CI/CD system and custom monitoring dashboards).

Importantly, the tools should serve the process, not dictate it. It’s easy to get lost in the sea of options. For SMBs, a pragmatic approach is to start with a minimal set that covers the basic needs (perhaps one experiment tracking tool + one deployment method + basic monitoring via a cloud service such as Amazon CloudWatch or custom code) and then evolve as your usage grows. Remember, fancy tools are not a silver bullet – what matters is implementing the practices of MLOps (versioning, testing, monitoring, etc.) in a way that your team can manage.

4. Best Practices for Implementing MLOps in an SMB

Implementing MLOps is as much about process and people as it is about tools. Below are best practices and practical steps tailored for SMBs looking to get started with MLOps:

4.1 Start with a Pilot Project

Rather than attempting a company-wide MLOps transformation overnight, identify a single pilot project:

Choose a project that is feasible in scope (not the most mission-critical system just yet, but something that matters). Maybe it’s a customer churn model for your subscription service, or a demand forecasting model for a subset of products.

Define what success looks like (e.g., “If we can reduce manual work by X%” or “if model deployment time goes from 3 weeks to 3 days”).

Use this project to implement end-to-end MLOps on a small scale. Document the process as you go. This will serve as a template for future projects and also produce a success story to get buy-in from others in the company.

4.2 Involve Stakeholders Early

MLOps, by nature, spans multiple roles. Early in the project:

Bring IT/DevOps into the loop: If you have an IT team or even one person managing your systems, involve them when designing how models will be deployed. Their expertise will ensure the solution fits with your company’s tech stack and security protocols.

Align with Business Objectives: Make sure the business side (product manager, department head, etc.) knows what MLOps entails and how it will benefit them. For instance, explain that by deploying the model with proper monitoring, the sales team will get more reliable predictions they can trust, and if anything goes wrong, the system will catch it.

Set Realistic Expectations: MLOps isn’t a magic button that instantly makes AI perfect. It’s an investment in quality and efficiency. Ensure stakeholders understand the timeline and ongoing nature of it (e.g., “We’re putting in this pipeline which will take 4-6 weeks to set up, but after that, each update will be much faster and less prone to error”).

4.3 Automation and CI/CD from Day One

When implementing the pilot, try to automate as much as possible from the beginning:

Use a source code repository (like Git) for all model code and data pipeline code. Have a process in place to merge changes and perhaps trigger automated tests (for example, have a small script that trains the model on a sample of data to see that everything runs).

If possible, set up a simple CI/CD pipeline. Even using something like GitHub Actions or GitLab CI (which are often free for small projects) to run training or deployment scripts on each commit can instill discipline. For example, each time new code is pushed, run a workflow that retrains the model (maybe on a small dataset) and runs basic accuracy checks. This catches issues early.

Automate model evaluation: define clear metrics that matter to your business (accuracy, precision, recall, RMSE, etc. depending on the problem) and have the pipeline output these. Consider setting thresholds to decide if a model version is “good to deploy”.
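
An automated evaluation step can be as small as the following sketch: compute a few metrics from labelled test data and report which ones fall below agreed minimums. The function names, the sample labels, and the threshold values are assumptions for illustration. In a CI job you would typically exit with a non-zero status when `failed_checks` returns anything, which marks the run as failed.

```python
from typing import Dict, List


def precision_recall(y_true: List[int], y_pred: List[int]) -> Dict[str, float]:
    """Precision and recall for binary labels (1 = positive, 0 = negative)."""
    tp = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 1)
    fp = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 1)
    fn = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 0)
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    return {"precision": precision, "recall": recall}


def failed_checks(metrics: Dict[str, float],
                  thresholds: Dict[str, float]) -> List[str]:
    """Names of metrics that fall below their minimum acceptable value."""
    return [name for name, minimum in thresholds.items()
            if metrics.get(name, 0.0) < minimum]


# Hypothetical test labels and model outputs.
y_true = [1, 0, 1, 1, 0, 0, 1, 0]
y_pred = [1, 0, 1, 0, 0, 1, 1, 0]
THRESHOLDS = {"precision": 0.70, "recall": 0.80}  # assumption: business-defined

metrics = precision_recall(y_true, y_pred)
failures = failed_checks(metrics, THRESHOLDS)
print(metrics, "failed:", failures)
```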

4.4 Embrace “Infrastructure as Code”

Even if you’re using cloud services, treat your MLOps setup like software:

Write scripts for setting up resources (for example, a Terraform script to create a storage bucket, a compute cluster, and a database). This way, if you needed to replicate the environment or recover from an outage, it’s faster.

Use configuration files for things like hyperparameters, data paths, etc., so that changes don’t require altering code, just updating config (which is less error-prone).

Document the pipeline: As part of code or in a README, document what each step does and how to run it. This helps when new team members join or if someone has to take over when the primary engineer is out.
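
Keeping settings in a configuration file rather than in code can look like the sketch below, which loads a JSON config into a typed object and rejects unknown keys so that typos fail loudly. The class `TrainingConfig`, its fields, and the default values are hypothetical examples, not recommended settings.

```python
import json
from dataclasses import dataclass, fields


@dataclass(frozen=True)
class TrainingConfig:
    """Settings that change between runs without touching training code."""
    data_path: str
    learning_rate: float = 0.1
    max_depth: int = 6
    train_window_days: int = 90


def load_config(text: str) -> TrainingConfig:
    """Parse JSON text into a TrainingConfig, rejecting unknown keys."""
    raw = json.loads(text)
    allowed = {f.name for f in fields(TrainingConfig)}
    unknown = set(raw) - allowed
    if unknown:
        raise ValueError(f"unknown config keys: {sorted(unknown)}")
    return TrainingConfig(**raw)


# In practice this text would be read from a file kept in version control.
config = load_config('{"data_path": "data/sales.csv", "max_depth": 4}')
print(config)
```

Because the config file lives in the same repository as the code, every change to a hyperparameter or data path shows up in the commit history.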

4.5 Monitor not just the Model, but the Pipeline

Often we emphasize model monitoring (which is essential), but also monitor your pipeline’s health:

If you have scheduled retraining, make sure there are alerts if a retraining job fails or doesn’t run on time. You don’t want retraining to stop quietly and only realize later that your model is 6 months out of date.

Track how long each part of the pipeline takes. If training time or prediction latency starts creeping up over weeks, it might signal a need to optimize (or maybe your data size has grown – which is a good thing, but then you adjust resources).

Watch costs if using cloud: A misconfigured pipeline can sometimes rack up compute costs. Many cloud tools allow setting budgets or at least sending alerts when cost exceeds a threshold. It’s a best practice to keep an eye on this, especially for SMBs with tight budgets.
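
Two of the pipeline health checks above are easy to express in code: "has retraining succeeded recently?" and "are we close to the monthly budget?". The sketch below is a minimal illustration. The 35-day limit, the 80% budget warning level, and the function names are assumptions, and in practice the inputs would come from your scheduler's run history and your cloud provider's billing data.

```python
from datetime import datetime, timedelta, timezone
from typing import Optional


def retraining_is_stale(last_success: Optional[datetime], now: datetime,
                        max_age: timedelta = timedelta(days=35)) -> bool:
    """True if retraining has never succeeded or last succeeded too long ago."""
    if last_success is None:
        return True
    return now - last_success > max_age


def near_budget(spend_to_date: float, monthly_budget: float,
                alert_fraction: float = 0.8) -> bool:
    """True once spending reaches the chosen fraction of the monthly budget."""
    return spend_to_date >= alert_fraction * monthly_budget


# Hypothetical values for a monthly retraining schedule.
now = datetime(2025, 2, 20, tzinfo=timezone.utc)
last_run = now - timedelta(days=40)

if retraining_is_stale(last_run, now):
    print("ALERT: retraining has not succeeded in over 35 days")
if near_budget(spend_to_date=410.0, monthly_budget=500.0):
    print("ALERT: cloud spend has passed 80% of the monthly budget")
```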

4.6 Security and Access Control

As you operationalize, ensure you’re not opening new vulnerabilities:

Limit who can deploy new models. Ideally, deployments are done through the pipeline (triggered by, say, a merge into a “main” branch that only authorized people can approve). This prevents ad-hoc changes that bypass validation.

Secure the model endpoints. If it’s an internal tool, keep it in a private network or behind authentication. If it’s external (e.g., part of your product), ensure standard web security (HTTPS, authentication tokens, etc.). Cloud MLOps services usually integrate with their security tools (like AWS IAM roles).

If using sensitive data, consider encryption (at rest and in transit) for data storage in the pipeline. Also, anonymize or avoid logging sensitive content when logging model inputs.
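
For the logging point above, one common pattern is to clean each record before it is written: drop fields that should never be stored and replace identifiers with keyed hashes so records can still be linked without exposing the original value. The sketch below uses Python's standard `hmac` module. The field names and the key handling are assumptions for illustration, and whether this level of pseudonymization satisfies your regulatory obligations is a question for your compliance advisor.

```python
import hashlib
import hmac

DROP_FIELDS = {"patient_name", "phone"}   # hypothetical: never written to logs
PSEUDONYMIZE_FIELDS = {"patient_id"}      # hypothetical: replaced by keyed hash


def sanitize_for_logging(features: dict, secret_key: bytes) -> dict:
    """Return a copy of features that is safer to write to prediction logs."""
    clean = {}
    for key, value in features.items():
        if key in DROP_FIELDS:
            continue
        if key in PSEUDONYMIZE_FIELDS:
            digest = hmac.new(secret_key, str(value).encode("utf-8"),
                              hashlib.sha256).hexdigest()
            clean[key] = digest[:16]  # shortened for readability in logs
        else:
            clean[key] = value
    return clean


# The key below is a placeholder; load a real one from a secrets manager.
raw = {"patient_id": 10482, "patient_name": "Ann Example", "phone": "555-0100",
       "age": 40, "days_since_booking": 12}
print(sanitize_for_logging(raw, secret_key=b"replace-with-real-secret"))
```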

4.7 Continuous Learning and Adjustment

MLOps is still a developing field; encourage a culture of learning:

Have retrospectives after each model deployment cycle. What went well? What broke? Use that to improve the process.

Stay updated on new tools or features. For example, if you’re using a certain platform, maybe they introduce a new drift detection feature or a simpler integration – keep an eye out as it could reduce your manual work.

Invest in training the team. Even a small team can benefit if one member takes an online course on MLOps or attends a workshop, then shares knowledge. The more comfortable your people are with the concepts, the smoother it will run.

4.8 Don’t Overcomplicate – Scale As Needed

A trap in MLOps is trying to implement a level of sophistication that you might not need initially:

You might not need a feature store on day one if you have just one model. Maybe a well-structured database table serves the purpose for now. You can introduce a dedicated feature store when you have multiple models sharing features.

You might not require an advanced model explainability tool for every project. Unless you are in a regulated industry or facing customer questions about “why did the AI do that?”, a basic log of feature importances or SHAP values might suffice to start.

Aim for MVP (Minimum Viable Pipeline): the simplest pipeline that achieves deployment, basic monitoring, and basic alerting. Get that working, deliver value, then iterate.

By following these best practices, SMBs can implement MLOps in a manageable, stepwise fashion. The key is to remember that MLOps is a journey, not a one-time setup. Start with a solid foundation and keep improving it as your AI initiatives grow. The payoff will be seen in how quickly and reliably you can go from an idea to a deployed model delivering ROI.

5. Real-World SMB Case Studies in MLOps

Nothing drives home the value of a concept like seeing it in action. While large tech companies often dominate the headlines, many smaller organizations are quietly succeeding with AI thanks to MLOps. Let’s explore two real-world-inspired case studies that illustrate practical challenges, solutions, and results for SMBs implementing MLOps.

Case Study 1: Healthcare Startup – Enhancing Patient No-Show Predictions

Business Context:

HealthyAppointments Co. is a regional healthcare startup running a chain of clinics. Missed appointments (no-shows) were a costly problem – they led to idle staff and lost revenue. The company built an ML model to predict which patients might not show up, so they could send reminders or fill those slots in advance. The data science team had a workable model with decent accuracy. The challenge was deploying it to dozens of clinics and updating it as patient behavior evolved (seasonality, new patient influx, etc.). The company has an IT team of just 3 people and one data scientist – classic SMB constraints.

Challenges:

Integration with Clinic Systems: The model needed to pull data from the appointment scheduling software (which was also evolving) and output flags to the front-desk interface used by receptionists.

Resource Constraints: With a small team, they couldn’t afford a complex custom MLOps build. They needed something that fits into their existing cloud setup (they were using AWS for other IT services).

Data Drift: The model’s accuracy dipped during an experiment – upon investigation, the data scientist realized that as the clinics opened new locations in different neighborhoods, the patient demographics changed, affecting the no-show patterns. They needed a way to catch these shifts.

MLOps Solution:

HealthyAppointments turned to AWS SageMaker for deployment. They containerized the model using SageMaker’s easy deployment tools and set it up as an endpoint. The MLOps pipeline was configured as follows: Every month, SageMaker would retrain the model on the latest 3 months of appointment data (via a scheduled job). They used SageMaker Model Monitor to track a few key features – e.g., average appointment wait time, patient age distribution – to detect drift. When drift alerts fired (for instance, when a new clinic opened in an area with younger patients, the age skewed younger than the training data’s distribution), the team would be prompted to retrain ahead of schedule or check if the model’s performance still held up. For integration, they didn’t overhaul their clinic software; instead, they used an API call. The receptionist’s app was modified (with minimal coding) to call the SageMaker endpoint whenever a new appointment was booked or the day before a visit, and it received a “no-show risk score.”

Results:

Within 2 months of deploying via this MLOps pipeline, the model was in use across all clinics. The no-show rate dropped by 25% – receptionists could proactively call high-risk patients or double-book slots that were likely to free up. This translated to an approximate $200k annual uptick in revenue across the chain, a significant sum for a small business. Just as importantly, the staff reported confidence in the system: the model’s predictions were trusted because they noticed it rarely “missed” a no-show. And whenever it needed improvement, the MLOps alerts caught it. For example, when COVID-19 vaccines became available, a lot of scheduling dynamics changed; the system flagged drift as many more appointments were being canceled or rescheduled. The team quickly retrained the model with post-vaccine rollout data, maintaining accuracy. In short, MLOps turned a one-time model into a continuously improving service, integrated seamlessly with operations. The single data scientist and IT trio were able to manage this because the heavy lifting (scaling, monitoring) was handled by their chosen platform.

Case Study 2: Specialty E-commerce SMB – Dynamic Pricing Model

Business Context:

PriceRight Gadgets is a specialized e-commerce SMB focusing on consumer electronics accessories. With about 40 employees, they compete with bigger players by being nimble in pricing and inventory. They developed an AI model to optimize pricing of products based on factors like demand, inventory levels, competitor pricing (scraped from the web), and even social media trends. The model was promising in simulations – it could potentially increase margins while still keeping prices attractive. The obstacle: implementing this in their live online store in a controlled way. They feared scenarios like the model mispricing items or not reacting fast enough to market changes, which could either anger customers or leave money on the table.

Challenges:

Real-Time Requirements: Prices needed to update perhaps daily or even intra-day for certain fast-moving products. Any downtime or error in the model could directly affect sales and reputation.

Testing and Safety: The company wanted a safety net – a way to ensure the AI wouldn’t do something crazy like price a $50 item at $500 by mistake. They needed rigorous testing and a fallback mechanism.

Multiple Data Feeds: The model took in data from various sources (their sales database, Google Analytics for web traffic, competitor price feeds). Managing these data pipelines and ensuring they were current was part of the deployment challenge.

MLOps Solution:

They opted for a mix of open-source and cloud solutions. For the data pipeline, they used Apache Airflow to schedule and orchestrate data fetching (e.g., scraping competitor prices every hour, loading sales data daily). The model training was done on a reserved EC2 instance (Amazon cloud VM) nightly, orchestrated by Airflow as well. They containerized the model and used Docker/Kubernetes on AWS (via Amazon’s Elastic Kubernetes Service) to handle serving. This might sound heavy, but they managed it through a simplified approach: one Kubernetes cluster running a few services, including the model API and a simple rules-based service as a fallback.

The deployment strategy was key: They used a canary deployment approach via Kubernetes. When a new model was trained and ready, it would initially serve only, say, 5% of the traffic (5% of product page views would get prices from the new model, while the rest still saw the old pricing strategy or the last stable model). Airflow and their monitoring setup would compare the sales metrics and error logs. If all looked good (no weird price outliers, sales conversions steady), they would gradually shift more traffic to the new model. If something looked off, they had an automated rollback – Kubernetes would route all requests back to the last known-good model (or, in the worst case, to a simple rule: don’t change prices by more than ±5% from baseline).
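The canary logic can be sketched in a few lines of Python. In the case study the traffic split was handled by Kubernetes, so this is only an application-level illustration of the same idea; the function names, the 5/25/50/100 rollout steps, and the 5% tolerated conversion drop are hypothetical.

```python
import hashlib

def serves_canary(request_id: str, canary_percent: float) -> bool:
    """Deterministically send a stable slice of requests to the new model."""
    digest = hashlib.sha256(request_id.encode("utf-8")).hexdigest()
    bucket = int(digest[:8], 16) % 10_000  # bucket in 0..9999
    return bucket < canary_percent * 100

def next_canary_percent(current_percent, canary_conversion, stable_conversion,
                        price_outliers, max_conversion_drop=0.05,
                        steps=(5, 25, 50, 100)):
    """Return the next traffic share for the new model; 0 means roll back."""
    conversion_floor = stable_conversion * (1 - max_conversion_drop)
    if price_outliers > 0 or canary_conversion < conversion_floor:
        return 0  # route everything back to the last known-good model
    for step in steps:
        if step > current_percent:
            return step
    return 100

# Healthy canary at 5% of traffic: widen the rollout
print(next_canary_percent(5, canary_conversion=0.031,
                          stable_conversion=0.030, price_outliers=0))
# Conversion dropped sharply: roll back
print(next_canary_percent(5, canary_conversion=0.020,
                          stable_conversion=0.030, price_outliers=0))
```

Hashing the request (or customer) ID rather than picking at random means the same visitor keeps seeing prices from the same model version during the test, which keeps the comparison clean.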

For monitoring, they focused on business metrics and model outputs: an alert if any price recommendation was out of a reasonable range (caught by a simple script scanning outputs), and business KPI dashboards (conversion rate, revenue per user) segmented by which model version was serving them. This combination of technical and business monitoring ensured the AI couldn’t silently harm performance.
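The output check described above can be as simple as the sketch below. It assumes a "reasonable range" of 0.5x to 2x the baseline price (a made-up band) and applies the ±5% rule from the case study only when the model's suggestion falls outside that band or is not a usable number.

```python
import math

def guarded_price(model_price, baseline_price, max_change=0.05,
                  sane_range=(0.5, 2.0)):
    """Return (price_to_show, used_fallback) for one product."""
    lowest_sane = baseline_price * sane_range[0]
    highest_sane = baseline_price * sane_range[1]
    usable = isinstance(model_price, (int, float)) and math.isfinite(model_price)
    if usable and lowest_sane <= model_price <= highest_sane:
        return round(model_price, 2), False
    # Fallback rule: stay within +/-5% of the baseline price
    floor = baseline_price * (1 - max_change)
    ceiling = baseline_price * (1 + max_change)
    candidate = model_price if usable else baseline_price
    return round(min(max(candidate, floor), ceiling), 2), True

# Hypothetical suggestions for a $50 item, including bad outputs
for suggestion in (54.99, 0.0, 500.0, float("nan")):
    price, used_fallback = guarded_price(suggestion, baseline_price=50.0)
    if used_fallback:
        print(f"ALERT: suggestion {suggestion} rejected, showing {price}")
```

Each rejected suggestion is both corrected and reported, so the team finds out about a broken data feed from an alert rather than from a customer.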

Results:

After a few months, PriceRight Gadgets had a robust dynamic pricing engine running. Thanks to their careful deployment and MLOps practices, they increased profit margins by around 8% without sacrificing sales volume – in fact, volume grew due to more competitive pricing on certain items. The CEO noted that this system gave them an edge in flash sales and seasonal promotions; they could respond to market changes within hours, something unheard of before. They also slept better knowing there were controls in place. During one incident, their competitor feed went haywire (pulling wrong data), which could have led the model to misprice items – but the monitoring caught unusual outputs (like a $0 price suggestion due to bad input) and the system automatically fell back to safe rules until it was fixed. That kind of resilience is exactly what MLOps is about. This case demonstrates that with the right planning, even a small e-commerce player can safely run an AI-driven feature that directly impacts revenue, all without a dedicated “ML engineering” team – they had a couple of savvy engineers and a part-time data science consultant managing the whole pipeline through smart tooling.

Case Study 3: Local Financial Services Firm – Credit Risk Model Deployment

(One more example to illustrate another domain, as optional reading.)

Business Context:

ABC Credit Union, a local financial services SMB, developed a machine learning model to improve their loan approval process. The model assesses the credit risk of applicants for small loans (combining traditional credit scores with other data like transaction history, employment stability, etc.). The goal was to approve loans faster for low-risk applicants and flag high-risk ones for manual review, thereby reducing default rates and speeding up service for good customers.

Challenges:

Regulatory Compliance: Being in finance, they had to explain decisions to regulators. Any model decision that led to a loan rejection might be scrutinized for fairness and correctness. So logging and explainability were big concerns.

IT Infrastructure: They operated mostly on-premises due to data sensitivity (customer financial data). They needed an MLOps approach that could be deployed in their own data center, leveraging existing hardware.

Team Skills: The firm had a couple of software engineers and one statistician who learned machine learning. None had extensive DevOps experience. They were concerned about how to maintain this system long-term.

MLOps Solution:

They chose an on-prem MLOps setup using mostly open source. They installed MLflow on their servers to manage the model lifecycle – the data scientist used MLflow Tracking to record experiments and the Model Registry to version the approved model. For deployment, they containerized the model with Docker and used lightweight container orchestration (Docker Compose initially, and later Kubernetes as they expanded). They set up a REST API service for the model within their internal network, so the loan application software could call it for a risk score.

To address compliance and explainability, they integrated the computation of SHAP (SHapley Additive exPlanations) values into the pipeline. Whenever the model made a prediction for an applicant, it also output the top factors that influenced that prediction. These were logged in a secure database. This way, if an applicant was denied and regulators or the customer inquired, they could retrieve a human-readable explanation (e.g., “Loan denied due to very high credit card balances and recent delinquencies”). As part of MLOps, they treated this explanation component as part of the model’s output to monitor as well (e.g., if the model ever started citing a weird factor, that would be a red flag that something is off).
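As a rough illustration of what "top factors" means, the sketch below handles the simplest case: a linear risk score with features treated as independent, where a feature's SHAP value reduces to its weight times the gap between the applicant's value and the average value. The credit union's actual model type is not stated, and the feature names, weights, averages, and record fields here are all invented for the example; a real pipeline would more likely call the SHAP library against the trained model.

```python
import json

def top_factors(weights, feature_means, applicant, k=2):
    """Rank features by the size of their contribution to this applicant's score."""
    contributions = {
        name: weights[name] * (applicant[name] - feature_means[name])
        for name in weights
    }
    ranked = sorted(contributions.items(), key=lambda item: abs(item[1]),
                    reverse=True)
    return ranked[:k]

def explanation_record(applicant_id, model_version, factors):
    """Build the JSON record that would be written to the audit log."""
    return json.dumps({
        "applicant_id": applicant_id,
        "model_version": model_version,
        "top_factors": [{"feature": n, "contribution": round(c, 3)}
                        for n, c in factors],
    })

# Hypothetical model: positive contributions push the risk score up
weights = {"card_utilization": 2.0, "recent_delinquencies": 0.8,
           "years_employed": -0.1}
feature_means = {"card_utilization": 0.30, "recent_delinquencies": 0.2,
                 "years_employed": 6.0}
applicant = {"card_utilization": 0.95, "recent_delinquencies": 3,
             "years_employed": 5}

factors = top_factors(weights, feature_means, applicant)
print(explanation_record("A-1001", "v7", factors))
```

Storing the model version alongside the factors is what later lets the team answer both audit questions at once: which model made the decision, and why.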

They scheduled model retraining for once a quarter, given loans have seasonal and economic cycle influences. Using MLflow’s registry, they had a staging area where a new model would be tested on a month of recent data to see how it would have performed vs. actual outcomes. Only after passing those tests (ensuring it wasn’t biased or less accurate than the current model) would it be promoted to production. This promotion was done with one click in MLflow, which then triggered a Jenkins job (their existing CI tool) to deploy the new container.
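The staging test can be pictured as a small gate function like the one below. The source only says the new model must not be biased or less accurate than the current one; the specific checks here (plain accuracy, plus a cap on the gap in flag rates between two applicant groups) and the 0.1 cap are assumptions, and the data is made up.

```python
def accuracy(predictions, outcomes):
    """Fraction of predictions that matched the actual outcome."""
    matches = sum(p == o for p, o in zip(predictions, outcomes))
    return matches / len(outcomes)

def flag_rate_gap(predictions, groups):
    """Largest difference in high-risk flag rate between applicant groups."""
    rates = []
    for group in set(groups):
        flags = [p for p, g in zip(predictions, groups) if g == group]
        rates.append(sum(flags) / len(flags))
    return max(rates) - min(rates)

def should_promote(candidate, current, outcomes, groups, max_gap=0.1):
    """Promote only if the candidate is at least as accurate and not skewed."""
    accurate_enough = accuracy(candidate, outcomes) >= accuracy(current, outcomes)
    fair_enough = flag_rate_gap(candidate, groups) <= max_gap
    return accurate_enough and fair_enough

# One month of hypothetical loans: 1 = defaulted / flagged high-risk
outcomes = [0, 0, 1, 1, 0, 1, 0, 0]
groups = ["A", "A", "A", "A", "B", "B", "B", "B"]
current_model = [0, 1, 1, 0, 0, 1, 0, 1]
candidate_model = [0, 0, 1, 1, 0, 1, 0, 1]

if should_promote(candidate_model, current_model, outcomes, groups):
    print("Candidate passed staging checks; ready for promotion")
```

Whatever the exact checks are, writing them down as code means every quarterly release is judged by the same rules, which is also easy to show an auditor.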

Results:

The credit union successfully reduced loan default rates by about 12% after implementing the model, while also increasing the speed of approvals for low-risk loans (many got instant approval, improving customer satisfaction). Importantly, they passed a regulatory audit where they showcased their MLOps-driven process: auditors were impressed by the level of tracking (they could show which model version was used for any given decision and why that decision was made). Internally, what could have been a fragile process became a well-oiled operation – each quarter when a new model is trained, the team confidently evaluates and rolls it out knowing checks and backups are in place. This case underlines how MLOps brings not just efficiency but also accountability to AI in business, which is crucial in domains like finance.

Summary of Case Insights:

Across these examples, common themes emerge: start with a clear business goal, use MLOps to systematically deploy and oversee the model, and prepare for change (data drift, market shifts, etc.). The SMBs leveraged a mix of managed services and open-source tools appropriate to their context. Each faced limited resources and high stakes, and in each, MLOps practices turned those constraints into a structured workflow that delivered real results – from revenue gains and cost savings to risk reduction and compliance.

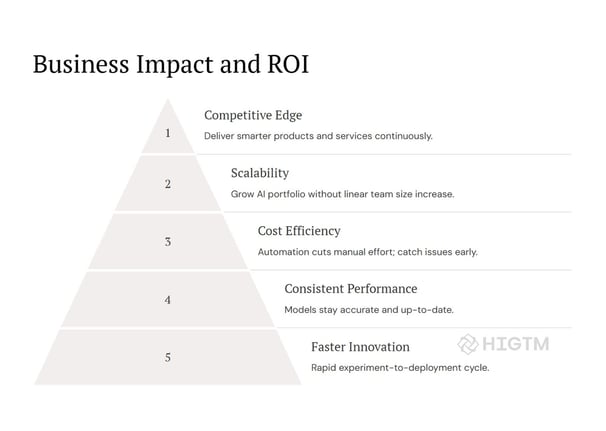

6. Business Impact of Embracing MLOps in SMBs

After understanding the processes and seeing examples, it’s worth summarizing the business impact of implementing MLOps for an SMB. At the end of the day, executives need to justify any new practice or technology in terms of ROI, risk management, and strategic advantage. Here’s how MLOps measures up:

6.1 Accelerating Time-to-Value

One of the most immediate benefits is speed. With a proper MLOps pipeline, the cycle of deploying improvements or new models is much faster. Instead of lengthy handoffs between data science and IT, and instead of deployments turning into “mini-projects” of their own, deployments become routine and quick. This means:

You can capitalize on opportunities faster (e.g., deploy a holiday-specific sales model just for the season and then roll back).

You can respond to adversities faster (if a model starts failing, you catch it and fix it before it causes much damage).

Essentially, the ideas from the whiteboard reach the market or the end-user in record time, which for an SMB can be the difference between leading a niche or lagging behind competitors.

6.2 Improving Model Performance and Lifespan

MLOps directly contributes to keeping models performing well over a longer period:

Continuous Improvement: With monitoring and scheduled retraining, models get better with time or at least maintain their performance. This contrasts with the old approach of “train once, deploy, and forget” which leads to models decaying and eventually being useless. Continuous improvement means you extract more value from the original investment in developing the model.

Extended Lifespan: A model developed today could, with proper MLOps, still be in use a year or two later (albeit as an evolved version of itself after many small updates). This longevity means the upfront cost of development is amortized over a longer useful life, improving the return on investment.

6.3 Operational Efficiency and Cost Savings

Automation = Efficiency: By automating repetitive tasks (testing, deployment, monitoring), you free up your human talent to work on higher-value activities (like developing the next model or analyzing insights for strategy). Especially in an SMB where people wear multiple hats, this efficiency is crucial.

Reducing Failures and Downtime: Proactive monitoring catches issues early, which can avoid expensive downtime or mistakes. For example, if an AI model in a supply chain starts giving wonky forecasts, catching that drift early prevents a scenario where you massively overstock or understock because you blindly trusted a deteriorated model. Such mistakes can be very costly; MLOps acts as a safety net.

Optimized Resource Use: MLOps can enable dynamic allocation of resources. Scale up computing when needed, scale down when idle. This ensures you’re not overspending on infrastructure. Also, by tracking performance, you might discover that a lighter model or cheaper setup works just as well during certain periods – enabling cost trimming. Many businesses find that after initial implementation, the ongoing costs of serving the model can be optimized thanks to usage data collected.

6.4 Enhancing Trust and Transparency

For executives and stakeholders, a monitored and well-managed AI system is inherently more trustable:

Transparent Workflows: MLOps encourages logging and documentation. Stakeholders can get reports on “what the model did this week, and why.” This demystifies AI for the business side. Instead of a black box that folks are wary of, it becomes a well-instrumented tool that people see the inner workings of (at least at a summary level).

Accountability: If something goes wrong, you can trace it. If a customer dispute arises (“Why was my loan denied?”), you have records and can respond confidently. This accountability prevents small issues from becoming major reputational crises. It also fosters a data-driven culture: people trust the numbers because they know those numbers are being double-checked by the system.

Regulatory Compliance: As noted in the credit union case study, being able to prove you have control over your models and data is a big plus in regulated industries. But even in unregulated spaces, consumer protection laws and ethical standards are pointing toward more scrutiny of AI (think of GDPR in Europe, which restricts decisions made solely by automated processing and entitles individuals to meaningful information about the logic involved). MLOps sets you up to meet these expectations with less scrambling.

6.5 Enabling Scalability and Future Growth

When your SMB adopts MLOps for one project, you’re not just solving that project – you’re building infrastructure and skills for the future:

Multi-Model Scaling: Once the first model is in production with MLOps, adding a second model is easier. You already have CI/CD, monitoring tools, etc., in place. Each subsequent AI initiative will be faster and cheaper to implement because of this head start.

Talent Attraction: Surprisingly, having modern practices like MLOps can help attract or retain talent. Data scientists and engineers prefer working in environments where they can push their work to production efficiently and see real impact, rather than having their models shelved due to “deployment issues.” It shows that the company is serious about AI and not just dabbling.

Competitive Differentiation: Over time, your ability as an organization to reliably deploy AI can become a differentiator. For example, if you’re an online retailer known for quickly adapting prices or trends, customers notice the responsiveness. Or a healthcare network known for efficient operations (because AI is optimizing schedules and resources behind the scenes) stands out in patient satisfaction. These competitive edges might be subtle, but they accumulate.

6.6 Risk Mitigation

Finally, MLOps mitigates several risks that might otherwise accompany AI projects:

Project Failure Risk: We saw earlier that a large portion of AI projects never make it to production. By instituting MLOps, you dramatically improve the odds of success for each AI project. You essentially de-risk the investment in building the model because you’ve paved the path to get it deployed and kept alive.

Financial Risk: Poorly governed models can make bad decisions that cost money. With MLOps, you have early warning systems and controls to prevent a rogue model from causing big losses. It’s akin to having financial auditors – you might never need them if everything goes well, but if something’s off, their presence is invaluable to catch it.

Opportunity Risk: On the flip side, missing out on opportunities is a risk. MLOps ensures you can seize opportunities by quickly trying out new models and features. In a fast-moving market, the risk of not doing something timely can be as severe as doing something wrong. A nimble MLOps capability cuts that “time-to-test” and “time-to-launch,” lowering the risk that you’ll be late to the party on a new trend or need.

7. Conclusion: Getting Started with MLOps in Your Business

Embracing MLOps is a technical journey, but at its heart, it’s a business strategy – one that ensures your AI investments translate into tangible outcomes. For SMB executives and decision-makers, the key takeaway is that MLOps is not out of reach. You don’t need a massive team or a multi-million dollar budget to start. With cloud tools and smart planning, even a small company can deploy AI models like the “big leagues.”

If you’re looking to get started:

Educate and Align: Make sure your leadership team and key technical staff understand the value of MLOps. Use some examples (even quoting that “80% of models never deploy” stat) to highlight why this matters. Align everyone on the goal: to make AI a sustained success, not a one-hit wonder.

Pick a Pilot and Plan: Choose a project that you will use to pilot MLOps practices. Map out the steps from data collection to model training to deployment and monitoring. Identify which tools you’ll use at each step (drawing on the suggestions in the sections above). Keep the plan lean.

Leverage Experts and Services: If your team is new to this, consider a consultation or training (there are many MLOps specialists and also lots of free online resources). Sometimes an initial consulting engagement (yes, with firms like HIGTM or others) can accelerate your design of the pipeline and avoid common pitfalls. They can tailor best practices to your specific context quickly.

Iterate and Scale: Implement the pilot, learn from it, and then broaden to more use cases. Treat your MLOps pipeline as a living product – continuously enhance it. Maybe the first iteration has basic monitoring; the next iteration adds advanced drift detection; later you add a feature store, and so on as needs grow.

Foster a Data-Driven Culture: As the technical side gets sorted, encourage use of these model insights in decision-making. Highlight wins (e.g., “our new model deployment improved sales by X” or “the monitoring alerted us and we avoided a problem”). This helps reinforce the value of the approach to all stakeholders, securing ongoing buy-in and investment.

In conclusion, MLOps is about making AI work for you consistently and reliably. It’s turning the sporadic lightning strikes of AI inspiration into a steady current of innovation powering your business. For SMBs, it levels the playing field – allowing you to punch above your weight by deploying sophisticated AI solutions with confidence and control. The world of AI is moving fast, but with MLOps in your toolkit, you’ll be well-equipped to move with it and harness its power — one deployed model at a time!

© 2025 HIGTM. All rights reserved.